Originally posted at Serverless

AWS provides the means to upload files to an S3 bucket using a pre-signed URL. The URL is generated using IAM credentials or a role which has permissions to write to the bucket. A pre-signed URL has an expiration time which defines the time by which the upload has to be started; after that, access is denied. The problem is that the URL can be used multiple times until it expires, and if a malicious actor gets their hands on the URL, your bucket may end up containing unwanted data.

How then do we prevent the usage of the pre-signed URL after the initial upload?

The following example leverages CloudFront and Lambda@Edge functions to expire the pre-signed URL when the initial upload starts, preventing any further use of the URL.

Lambda@Edge functions are similar to AWS Lambda functions, but with a few limitations. The allowed execution time and memory size are smaller than in regular Lambda functions, and environment variables cannot be used.

The example project is made with the Serverless Framework. Let’s go through the basic concept and components.

The Concept

The objective is to ensure that every pre-signed URL is only ever used once, and becomes unavailable after the first use.

I had a few different ideas for the implementation until I settled on one that seemed to be the most efficient at achieving our objective.

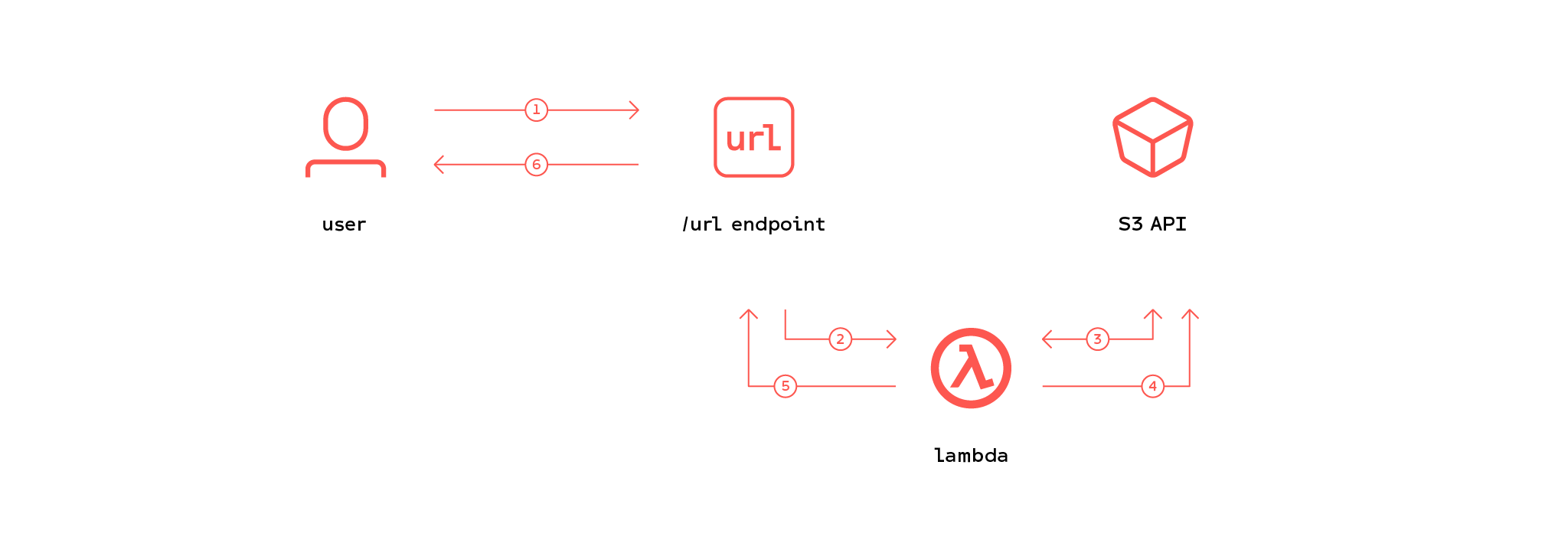

Figure 1. Presigned URL creation

First, the user makes a request to the /url endpoint (step 1, Figure 1). This in turn triggers a Lambda function (step 2, Figure 1) which creates a pre-signed URL using the S3 API (step 3, Figure 1). A hash is then created from the URL and saved to the bucket (step 4, Figure 1) as a valid signature. The Lambda function creates a response which contains the URL (step 5, Figure 1) and returns it to the user (step 6, Figure 1).

Figure 2. Verification of the presigned URL

The user then uses that URL to upload the file (step 1, Figure 2). A CloudFront viewer request triggers a Lambda function (step 2, Figure 2) which verifies that the hashed URL is indexed as a valid token and is not indexed as an expired token (step 3, Figure 2). If both conditions hold, the current hash is written to the expired signatures index (step 4, Figure 2).

In addition to that, the version of the expired signature object is checked. If this is the first version of this particular expired hash, everything is OK (step 5, Figure 2). This check is meant to prevent someone from intercepting the original response with a signed URL and using it before the legitimate client has had a chance to.

After all the verifications have passed, the original request is returned to CloudFront (step 6, Figure 2) and forwarded to the bucket (step 7, Figure 2), which then decides whether the pre-signed URL is valid for PUTting the object.

AWS Resources

The S3 bucket will contain the uploaded files and an index of used signatures. There is no need for a bucket policy, ACLs, or anything else; the bucket is private and cannot be accessed from outside without a pre-signed URL.

The generation and invalidation of the signed URLs will happen in the Lambda@Edge functions, which are triggered in CloudFront’s viewer request stage. The functions have a role which allows them to generate the pre-signed URL, check the URL hash against the signature indices, and write new index entries.

The bucket and CloudFront distribution are defined in the resources block of the serverless.yml file. Since Lambda@Edge functions cannot access environment variables, configuration values cannot be passed that way; instead, the bucket name is stored in and fetched from an external JSON file.
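As a rough illustration of the JSON-file approach, the sketch below reads the bucket name from a file bundled with the function. The helper name loadConfig and the "bucket" property are assumptions made for this example; the actual project may name them differently.

```javascript
const fs = require('fs');

// Hypothetical helper: reads deployment settings from a JSON file that is
// packaged with the function, since Lambda@Edge has no environment variables.
// The "bucket" property name is an assumption for this sketch.
function loadConfig(filePath) {
  const config = JSON.parse(fs.readFileSync(filePath, 'utf8'));
  if (typeof config.bucket !== 'string' || config.bucket.length === 0) {
    throw new Error(`Missing "bucket" in ${filePath}`);
  }
  return config;
}
```

In a CommonJS handler the same file could also be loaded with a plain require of the JSON file; the explicit helper just makes a missing value fail loudly.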

The CloudFront distribution has its origin set to our S3 bucket, and it has two behaviors. The default behavior performs the upload with a pre-signed URL; the second matches the URL pattern /url and responds with the pre-signed URL that is used for the upload.

The default behavior in the distribution configuration allows all the HTTP methods so that PUT can be used to upload files. S3 allows files up to 5 gigabytes to be uploaded with that method, although it is better to use multipart upload for files bigger than 100 megabytes. For simplicity, this example uses only PUT.
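The size guidance above can be expressed as a small helper. This is only a sketch: the function name and return values are made up for illustration, and treating the limits as binary units (1024-based) is an assumption.

```javascript
// Thresholds taken from the paragraph above; binary units are an assumption.
const MAX_SINGLE_PUT_BYTES = 5 * 1024 ** 3;      // 5 GB limit for one PUT
const MULTIPART_ADVISED_BYTES = 100 * 1024 ** 2; // multipart advised above 100 MB

// Hypothetical helper: suggests an upload method for a given file size.
function chooseUploadMethod(sizeInBytes) {
  if (sizeInBytes > MAX_SINGLE_PUT_BYTES) return 'multipart-required';
  if (sizeInBytes > MULTIPART_ADVISED_BYTES) return 'multipart-recommended';
  return 'single-put';
}
```

A client could call this before requesting a URL and refuse, or warn about, files that a single PUT handles poorly.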

CloudFront should also forward the query string, which contains the signature and token for the upload.

The origin contains only the domain name, which is derived from the bucket name, and an ID. The S3OriginConfig is an empty object because the bucket will be private. If you want to allow users to view files which are saved to the bucket, the origin access identity can be set.

Lambda Functions

Both of the functions are triggered in the viewer request stage, which is when CloudFront receives the request from the end user (browser, mobile app, and such).

The function which creates the pre-signed URL is straightforward: it uses the AWS SDK to create the URL, stores a hash of the URL in the bucket, and returns the URL. I’m using the Node.js uuid module to generate a random object key for each upload.
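A minimal sketch of that flow follows. It is not the project's actual code: signer.sign and store.put are hypothetical wrappers around the S3 SDK calls, the signatures/valid/ key prefix is invented for illustration, SHA-256 is an assumed hash algorithm, and hashing only the path and query string is an assumption as well. Node's built-in crypto.randomUUID stands in for the uuid module here.

```javascript
const crypto = require('crypto');
const { URL } = require('url');

// Hashes the path and query string of a signed URL. SHA-256 and the choice
// of hashing only path + query are assumptions made for this sketch.
function hashUrl(url) {
  const { pathname, search } = new URL(url);
  return crypto.createHash('sha256').update(pathname + search).digest('hex');
}

// `signer.sign(key)` and `store.put(key, body)` are hypothetical wrappers
// around the S3 SDK (pre-signing a PUT and writing an object).
async function createUploadUrl(signer, store) {
  const objectKey = crypto.randomUUID();          // random key per upload
  const signedUrl = await signer.sign(objectKey); // pre-signed PUT URL
  const hash = hashUrl(signedUrl);
  // Record the hash as a valid signature; the key prefix is hypothetical.
  await store.put(`signatures/valid/${hash}`, objectKey);
  return { signedUrl, hash };
}
```

Passing the signer and store in as arguments keeps the logic testable with plain in-memory fakes, which fits the mocked-SDK testing approach described later in the article.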

The SDK call returns the full URL of the S3 bucket, with the signature carried in the query string. As CloudFront is used in front of the bucket, the URL domain must be the domain of the CloudFront distribution. The path and query are parsed from the signed URL using the Node.js URL module, and the CloudFront distribution domain is available in the request headers.
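The domain swap can be sketched as below. The function name is hypothetical; the request shape follows the Lambda@Edge viewer request event, where each header is an array of key/value objects keyed by the lowercase header name.

```javascript
const { URL } = require('url');

// Hypothetical helper: keeps the path and query string of the signed S3 URL
// but replaces the host with the one from the CloudFront viewer request.
function toCloudFrontUrl(signedS3Url, request) {
  const { pathname, search } = new URL(signedS3Url);
  const host = request.headers.host[0].value; // Lambda@Edge header format
  return `https://${host}${pathname}${search}`;
}
```

Inside a handler, request would typically be event.Records[0].cf.request.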

The function that checks whether the current upload is the first one uses the signature indices written to that same bucket. It first confirms that the hash has an entry in the “valid index” and that the “expired index” doesn’t contain it. If both conditions hold, the function continues; otherwise, it returns a 403 Forbidden response.

If the entry doesn’t exist in the expired index, the function writes the current filename and signature to that index.

Lastly, there is an extra check that fetches the versions of the index key. If it is not the first version, the response is again 403 Forbidden.

If the version id matches the initial version id, Lambda will pass the request on as it is to the origin.
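The three checks described above could look roughly like this. It is a sketch under several assumptions: bucket versioning is enabled, store is a hypothetical wrapper around S3 (exists, put returning a versionId, and listVersionIds returning IDs oldest first), the key prefixes are invented, and the hash is a SHA-256 of the request path and query string.

```javascript
const crypto = require('crypto');

// Lambda@Edge response that rejects the request.
const forbidden = { status: '403', statusDescription: 'Forbidden' };

// `store` is a hypothetical S3 wrapper with three async methods:
// exists(key), put(key, body) -> { versionId }, listVersionIds(key) (oldest first).
async function verifyUpload(request, store) {
  const hash = crypto
    .createHash('sha256') // assumed; must match whatever was used at creation
    .update(`${request.uri}?${request.querystring}`)
    .digest('hex');
  const validKey = `signatures/valid/${hash}`;     // hypothetical prefix
  const expiredKey = `signatures/expired/${hash}`; // hypothetical prefix

  // 1. The hash must be known and must not have been used already.
  if (!(await store.exists(validKey)) || (await store.exists(expiredKey))) {
    return forbidden;
  }

  // 2. Mark the hash as expired; with versioning on, each write is a new version.
  const { versionId } = await store.put(expiredKey, request.uri);

  // 3. Only the request that wrote the first version may continue.
  const versions = await store.listVersionIds(expiredKey);
  if (versions[0] !== versionId) {
    return forbidden;
  }
  return request; // pass the unchanged request on to the origin
}
```

The version comparison matters when two requests with the same URL arrive almost simultaneously: both may pass the first check, but only one of them can own the first version of the expired entry.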

Permissions

The function that creates the pre-signed URL needs to have the s3:PutObject permission. The function that checks whether the current upload is the initial upload requires permissions for s3:GetObject, s3:PutObject, s3:ListBucket, and s3:ListBucketVersions.
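For the verification function, those permissions might translate into a policy document like the one built below. The helper name is hypothetical, and the split into two statements reflects that the object actions apply to object ARNs while the list actions apply to the bucket ARN itself.

```javascript
// Hypothetical helper: builds an IAM policy document for the function that
// verifies uploads. The bucket name is supplied by the caller.
function verificationPolicy(bucketName) {
  const bucketArn = `arn:aws:s3:::${bucketName}`;
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Action: ['s3:GetObject', 's3:PutObject'], // object-level actions
        Resource: `${bucketArn}/*`,
      },
      {
        Effect: 'Allow',
        Action: ['s3:ListBucket', 's3:ListBucketVersions'], // bucket-level actions
        Resource: bucketArn,
      },
    ],
  };
}
```

In the Serverless Framework the same statements would normally be written in serverless.yml; the object form here is only meant to show which resource each action targets.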

Development and Deployment

Deploying the stack with the Serverless Framework is easy: run sls deploy and then wait. And wait. Everything related to CloudFront takes time, at least 10 minutes, and removal of the replicated functions can take up to 45 minutes. That is a good driver for test-driven development. The example project uses Jest with a mocked AWS SDK; that way local development is fast, and small logic errors are caught before deployment.

Bear in mind that Lambda@Edge functions are always deployed to the US East (N. Virginia) region, us-east-1. From there they are replicated to edge locations and invoked at the location closest to the client.

Time for a Test Run!

First, determine the domain name of the created distribution, either by logging in to the AWS web console or with the AWS CLI. The following snippet lists all the deployed distributions and shows domain names and comments. The comment field is the same one that is defined as a comment in the CloudFront resource in serverless.yml. In the example, it is the service name, e.g. dev-presigned-upload.

Pick the domain name from the list and run curl DOMAIN_NAME/url. Copy the response and then run the following snippet.

You should get something like this as a response.

Then rerun the same upload snippet, with the same pre-signed URL, and the response should be the following.

In the latter example, the Lambda function has determined that the pre-signed URL has already been used and responded with 403 Forbidden.

Now you have a CloudFront distribution that creates pre-signed URLs for uploading files and verifies that they are not used more than once.

To secure the endpoint that creates the pre-signed URLs, you can create a custom authorizer which validates each request, e.g. using an authorization header, or you can use AWS WAF to limit access.

If you have any improvements or corrections related to the code, please open an issue or PR to the repository.

Links to relevant resources