How to monitor AWS account activity with CloudTrail, CloudWatch Events and Serverless

Originally posted at Serverless on January 15th, 2018

CloudTrail and CloudWatch Events are two powerful services from AWS that allow you to monitor and react to activity in your account, including changes in resources or attempted API calls.

This can be useful for audit logging or real-time notifications of suspicious or undesirable activity.

In this tutorial, we’ll set up two examples to work with CloudWatch Events and CloudTrail. The first will use standard CloudWatch Events to watch for changes in Parameter Store (SSM) and send notifications to a Slack channel. The second will use custom CloudWatch Events via CloudTrail to monitor for actions to create DynamoDB tables and send notifications.

Setting up

Before we begin, you’ll need the Serverless Framework installed with an AWS account set up.

The examples below are in Python, but the logic is straightforward, so you can rewrite them in any language you prefer.

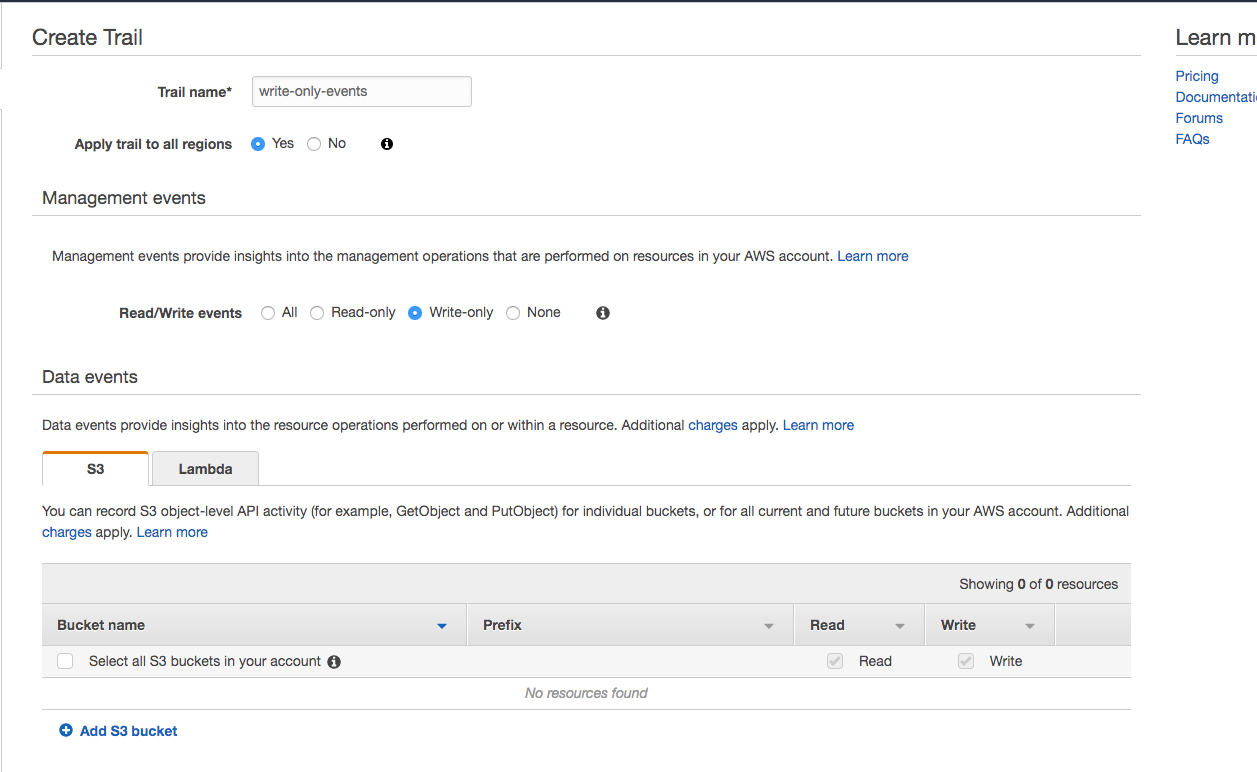

If you want to trigger on custom events using CloudTrail, you’ll need to set up a trail in CloudTrail. In the AWS console, navigate to the CloudTrail service.

Click “Create trail” and configure a trail for “write-only” management events:

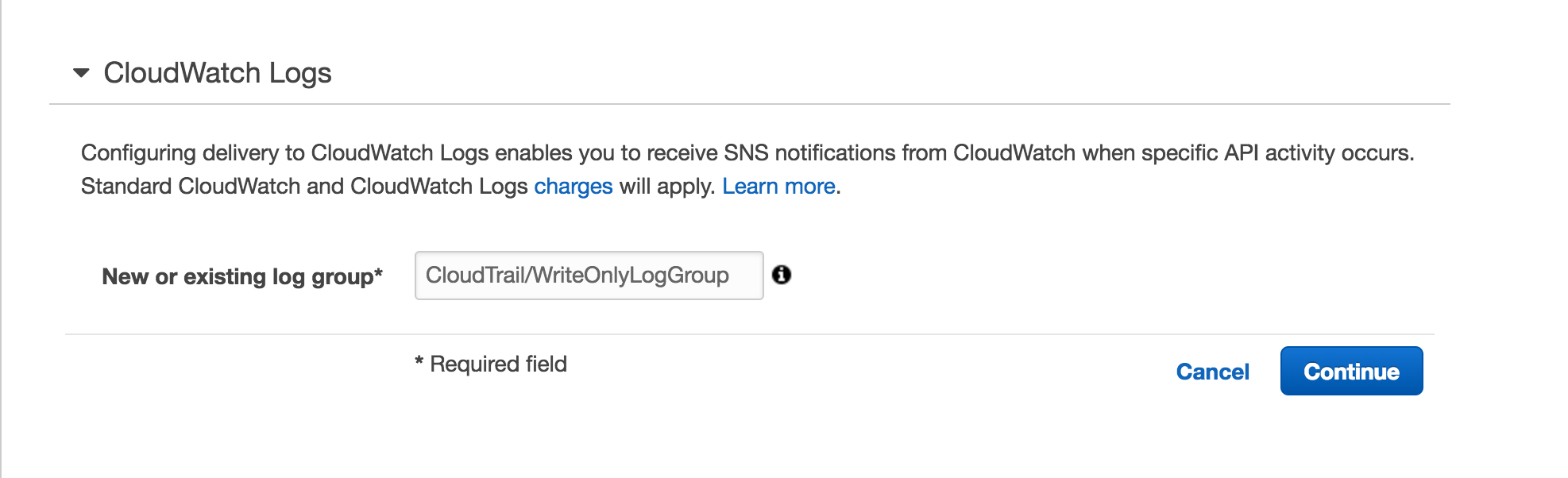

Have your trail write to a CloudWatch Logs log group so you can subscribe to notifications:

Both examples in this tutorial post notifications to Slack via the Incoming Webhook app. You’ll need to set up an Incoming Webhook app if you want this to work.

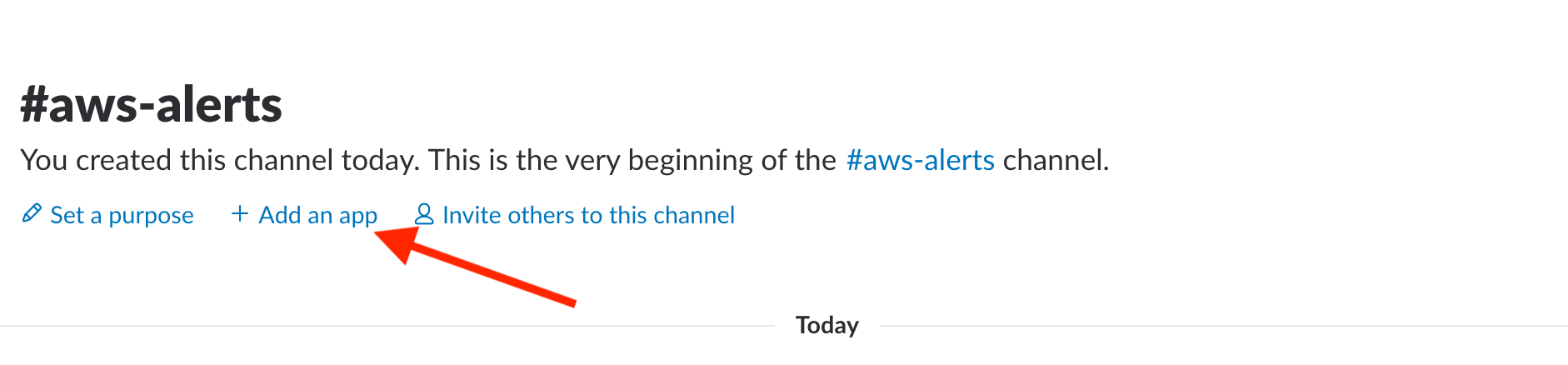

First, create or navigate to the Slack channel where you want to post messages. Click “Add an app”:

In the app search page, search for “Incoming Webhook” and choose to add one. Make sure it is configured to post to the channel you want.

After you click “Add Incoming Webhooks Integration”, it will show your Webhook URL. This is what you will use in your serverless.yml files for the SLACK_URL variable.

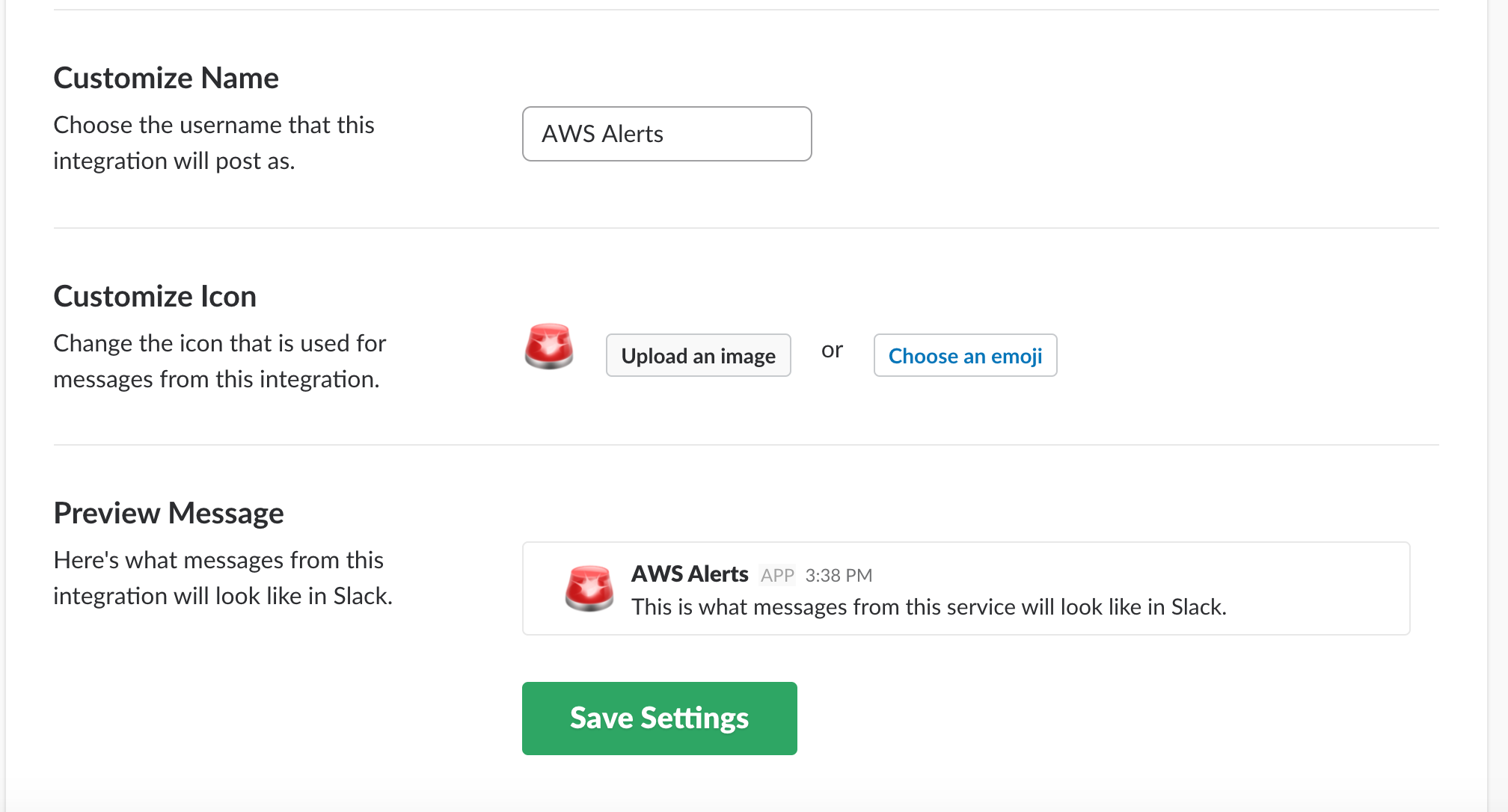

If you want to, you can customize the name and icon of your webhook to make the messages look nicer. Below, I’ve used the “rotating-light” emoji and named my webhook “AWS Alerts”:

With that all set up, let’s build our first integration!

Monitoring Parameter Store Changes

The first example will post notifications of changes in AWS Parameter Store to our Slack channel. Big shout-out to Eric Hammond for inspiring this idea; he’s an AWS expert and a great follow on Twitter:

*#awswishlist Ability to trigger AWS Lambda function when an SSM Parameter Store value changes. That could then run CloudFormation update for stacks that use the parameter - Eric Hammond (@esh) December 29, 2017*

Parameter Store (also called SSM, for Simple Systems Manager) is a way to centrally store configuration, such as API keys, resource identifiers, or other config.

(Check out our previous post on using Parameter Store in your Serverless applications.)

SSM integrates directly with CloudWatch Events to expose certain events when they occur. The full list of supported events is in the CloudWatch Events documentation. In this example, we are interested in the SSM Parameter Store Change event, which is fired whenever an SSM parameter is changed.

CloudWatch Event subscriptions work by providing a filter pattern to match certain events. If the pattern matches, your subscription will send the matched event to your target.

In this case, our target will be a Lambda function.

Here’s an example SSM Parameter Store Event:

We need to specify which elements of the Event are important to match for our subscription.

Two elements are important here. First, we want the source to equal aws.ssm. Second, we want the detail-type to equal Parameter Store Change. This is narrow enough to exclude events we don't care about, while still capturing every Parameter Store change because we don't filter on any of the other fields.
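To make the matching concrete, here is a rough sketch in Python. The sample event follows the general shape of a Parameter Store Change event, but the ID, account number, timestamp, and parameter name are placeholder values. The `matches` helper is a hypothetical, simplified stand-in for what CloudWatch Events does on the AWS side: it only handles exact-value matching and ignores the more advanced pattern features.

```python
# Sample "Parameter Store Change" event. The id, account, time, and
# parameter name are placeholders, not values from a real account.
sample_event = {
    "version": "0",
    "id": "00000000-0000-0000-0000-000000000000",
    "detail-type": "Parameter Store Change",
    "source": "aws.ssm",
    "account": "123456789012",
    "time": "2018-01-15T12:00:00Z",
    "region": "us-east-1",
    "resources": ["arn:aws:ssm:us-east-1:123456789012:parameter/foo"],
    "detail": {"operation": "Update", "name": "foo", "type": "String"},
}

# The filter described above: match on source and detail-type only.
pattern = {"source": ["aws.ssm"], "detail-type": ["Parameter Store Change"]}


def matches(pattern, event):
    """Simplified approximation of exact-value event pattern matching.

    Every key in the pattern must be present in the event. A list in the
    pattern means "any of these values"; a dict means "recurse".
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            # Event fields that are lists match if any element is allowed.
            values = actual if isinstance(actual, list) else [actual]
            if not any(value in expected for value in values):
                return False
    return True
```

Calling `matches(pattern, sample_event)` returns `True`, while a pattern with a different source such as `{"source": ["aws.ec2"]}` does not match. Fields the pattern leaves out (region, account, detail) are never checked, which is why the two-key filter catches every Parameter Store change.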

The Serverless Framework makes it really easy to subscribe to CloudWatch Events. For the function we want to trigger, we create a cloudWatchEvent event type with a mapping of our filter requirements.

Here’s an example of our serverless.yml:

Notice that the functions block includes our filter from above. There are two other items to note:

We injected our Slack webhook URL into our environment as SLACK_URL. Make sure you update this with your actual webhook URL if you're following along.

We added an IAM statement that gives us access to run the DescribeParameters command in SSM. This will let us enrich the changed parameter event by showing what version of the parameter we’re on and who changed it most recently. It does not provide permissions to read the parameter value, so it’s safe to give access to parameters with sensitive keys.

Our serverless.yml says that our function is defined in a handler.py module with a function name of parameter. Let's implement that now.

Put this into your handler.py file:

This function takes the incoming event and assembles it into a format expected by Slack for its webhook. Then, it posts the message to Slack.
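As a minimal sketch of what such a handler could look like (not necessarily the original code), the version below uses only the standard library to post to Slack and imports boto3 inside the handler, since boto3 ships with the Lambda Python runtime. The helper names `format_parameter_message` and `post_to_slack` and the exact message wording are assumptions made for illustration; `parameter` is the handler name given above, and `SLACK_URL` is the environment variable from serverless.yml.

```python
import json
import os
import urllib.request


def format_parameter_message(event, metadata=None):
    """Build a Slack webhook payload from a Parameter Store Change event.

    `metadata` is an optional entry from DescribeParameters, used to add
    the current version and the user who last modified the parameter.
    """
    detail = event.get("detail", {})
    lines = [
        "Parameter Store change: {} on `{}`".format(
            detail.get("operation", "Unknown"), detail.get("name", "unknown")
        ),
        "Region: {}".format(event.get("region", "unknown")),
    ]
    if metadata:
        lines.append("Version: {}".format(metadata.get("Version")))
        lines.append("Last modified by: {}".format(metadata.get("LastModifiedUser")))
    return {"text": "\n".join(lines)}


def post_to_slack(payload):
    """POST a JSON payload to the webhook URL stored in SLACK_URL."""
    request = urllib.request.Request(
        os.environ["SLACK_URL"],
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return response.status


def parameter(event, context):
    """Lambda entry point: enrich the event, then notify Slack."""
    import boto3  # imported here so the formatting code has no AWS dependency

    name = event["detail"]["name"]
    found = boto3.client("ssm").describe_parameters(
        ParameterFilters=[{"Key": "Name", "Values": [name]}]
    )["Parameters"]
    metadata = found[0] if found else None
    post_to_slack(format_parameter_message(event, metadata))
```

Keeping the message formatting separate from the network calls makes it easy to check the Slack text locally without deploying anything.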

Let’s deploy our service:

Then, let’s alter a parameter in SSM to trigger the event:

Note: Make sure you’re running the put-parameter command in the same region that your service is deployed in.
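If you would rather trigger the change from Python than from the CLI, a rough boto3 equivalent might look like the sketch below. The function names and the choice of a plain `String` parameter are assumptions for illustration. The `region` argument is explicit to reflect the note above: the change has to happen in the region where the service is deployed.

```python
def put_parameter_request(name, value):
    """Arguments for SSM PutParameter; Overwrite lets us update in place."""
    return {"Name": name, "Value": value, "Type": "String", "Overwrite": True}


def change_parameter(name, value, region):
    """Create or update a parameter in the given region."""
    import boto3  # requires boto3 and configured AWS credentials

    ssm = boto3.client("ssm", region_name=region)
    return ssm.put_parameter(**put_parameter_request(name, value))
```

For example, `change_parameter("foo", "bar", "us-east-1")` would update a hypothetical parameter named `foo`, assuming the service was deployed to us-east-1.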

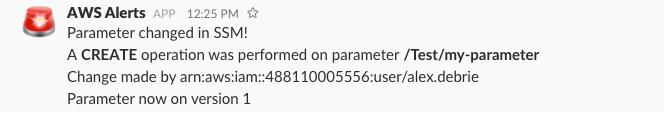

After a few minutes, you should get a notification in your Slack channel:

💥 Awesome!

Monitoring new DynamoDB tables with CloudTrail

In the previous example, we subscribed to SSM Parameter Store events. These events are already provided directly by CloudWatch Events.

However, not all AWS API events are provided by CloudWatch Events. To get access to a broader range of AWS events, we can use CloudTrail.

Before you can use CloudTrail events in CloudWatch Event subscriptions, you’ll need to set up CloudTrail to write to a CloudWatch log group. If you need help with this, it’s covered above in the Setting up section.

Once you’re set up, you can see the huge list of events supported by CloudTrail event history.

Generally, an event will be supported if it meets both of the following requirements:

It is a state-changing event, rather than a read-only event. Think CreateTable or DeleteTable for DynamoDB, but not DescribeTable.

It is a management-level event, rather than a data-level event. For S3, this means CreateBucket or PutBucketPolicy but not PutObject or DeleteObject.

Note: You can enable data-level events for S3 and Lambda in your CloudTrail configuration if desired. This will trigger many more events, so enable them with care.

When configuring a CloudWatch Events subscription for an AWS API call, your pattern will always look something like this:

There will be a source key that matches the particular AWS service you're tracking. The detail-type will be AWS API Call via CloudTrail. Finally, the detail key will contain an eventName array listing one or more event names you want to match.
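The three parts described here can be sketched as a small Python helper that builds the pattern as a dictionary. The helper name `api_call_pattern` is hypothetical; the keys and the `AWS API Call via CloudTrail` detail-type come from the description above.

```python
import json


def api_call_pattern(source, event_names):
    """Build an event pattern for AWS API calls recorded by CloudTrail."""
    return {
        "source": [source],
        "detail-type": ["AWS API Call via CloudTrail"],
        "detail": {"eventName": list(event_names)},
    }


# Pattern for the DynamoDB example in this section.
create_table_pattern = api_call_pattern("aws.dynamodb", ["CreateTable"])
print(json.dumps(create_table_pattern, indent=2))
```

To watch more than one call, pass more names, for example `api_call_pattern("aws.dynamodb", ["CreateTable", "DeleteTable"])`.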

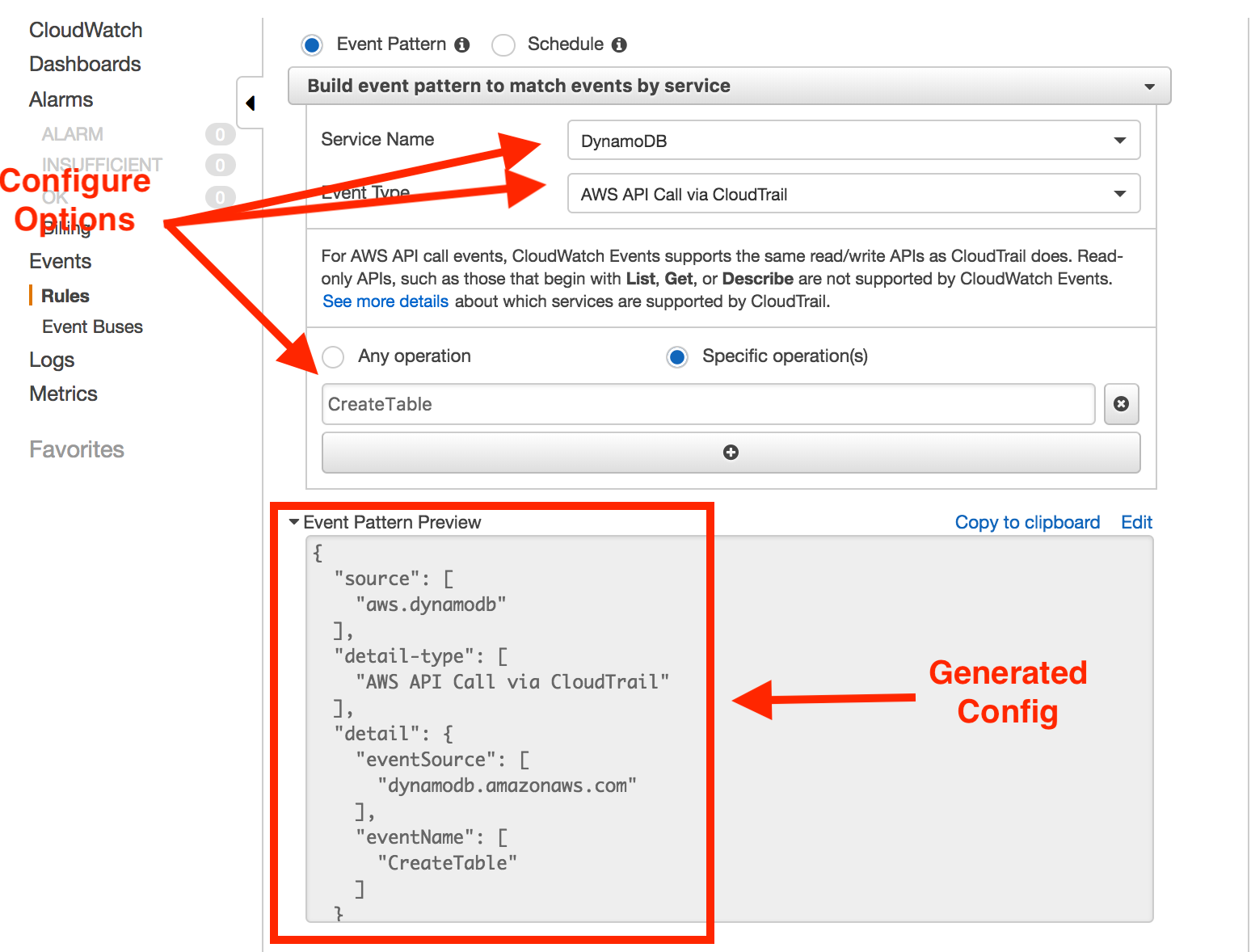

Pro-tip: Use the CloudWatch Rules console to help configure your items the first few times. You can point and click different options and it will show the subscription pattern:

Let’s insert our DynamoDB CreateTable pattern into our serverless.yml:

This is very similar to the previous example: we’re setting up our CloudWatch Event subscription and passing in our Slack webhook URL to be used by our function.

Then, implement our function logic in handler.py:

Again, this is pretty similar to the last example: we’re taking the event, assembling it into a format for Slack messages, then posting to Slack.
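A minimal sketch of such a handler follows. It is an illustration, not necessarily the original code. The handler name `create_table` and the helper names are assumptions. The fields read from the event (`userIdentity.arn`, `awsRegion`, `requestParameters.tableName`, and `requestParameters.provisionedThroughput`) reflect how CloudTrail typically records a CreateTable call, so every lookup falls back to a default in case a field is missing.

```python
import json
import os
import urllib.request


def format_create_table_message(event):
    """Build a Slack webhook payload from a CloudTrail CreateTable event."""
    detail = event.get("detail") or {}
    params = detail.get("requestParameters") or {}
    throughput = params.get("provisionedThroughput") or {}
    user = (detail.get("userIdentity") or {}).get("arn", "unknown")
    text = "\n".join([
        "New DynamoDB table: `{}`".format(params.get("tableName", "unknown")),
        "Created by: {}".format(user),
        "Region: {}".format(detail.get("awsRegion", "unknown")),
        "Provisioned throughput: {} read / {} write".format(
            throughput.get("readCapacityUnits", "?"),
            throughput.get("writeCapacityUnits", "?"),
        ),
    ])
    return {"text": text}


def post_to_slack(payload):
    """POST a JSON payload to the webhook URL stored in SLACK_URL."""
    request = urllib.request.Request(
        os.environ["SLACK_URL"],
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return response.status


def create_table(event, context):
    """Lambda entry point (hypothetical name): notify Slack of a new table."""
    post_to_slack(format_create_table_message(event))
```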

Let’s deploy this one:

And then trigger an event by creating a DynamoDB table via the AWS CLI:
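For readers who prefer Python to the CLI, the same step could be done with boto3 along these lines. The function names, the single string hash key called `id`, and the throughput of 1 read and 1 write unit are all assumptions chosen to keep the test table small. A matching delete helper is included for cleaning up once you have seen the notification.

```python
def create_table_request(table_name):
    """Arguments for a minimal DynamoDB table with a single string hash key."""
    return {
        "TableName": table_name,
        "AttributeDefinitions": [{"AttributeName": "id", "AttributeType": "S"}],
        "KeySchema": [{"AttributeName": "id", "KeyType": "HASH"}],
        "ProvisionedThroughput": {"ReadCapacityUnits": 1, "WriteCapacityUnits": 1},
    }


def create_test_table(table_name, region):
    """Create the table; this is the API call CloudTrail will record."""
    import boto3  # requires boto3 and configured AWS credentials

    dynamodb = boto3.client("dynamodb", region_name=region)
    return dynamodb.create_table(**create_table_request(table_name))


def delete_test_table(table_name, region):
    """Remove the test table so it does not keep accruing charges."""
    import boto3

    dynamodb = boto3.client("dynamodb", region_name=region)
    return dynamodb.delete_table(TableName=table_name)
```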

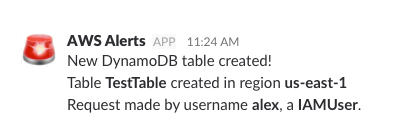

Wait a few moments, and you should get a notification in Slack:

Aw yeah.

You could implement some really cool functionality around this, including calculating and displaying the monthly price of the table based on the provisioned throughput, or making sure all infrastructure provisioning is handled through a particular IAM user (e.g. the credentials used with your CI/CD workflows).
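As one possible take on the second idea, the sketch below flags any event whose caller is not the expected provisioning identity. The function names and the example ARNs are hypothetical, and the check assumes the caller's ARN is available at `detail.userIdentity.arn` in the CloudTrail event.

```python
def is_expected_provisioner(event, allowed_arn):
    """Return True if the API call was made by the allowed IAM identity."""
    identity = (event.get("detail") or {}).get("userIdentity") or {}
    return identity.get("arn") == allowed_arn


def provisioning_warning(event, allowed_arn):
    """Return a Slack payload for unexpected callers, or None if all is well."""
    if is_expected_provisioner(event, allowed_arn):
        return None
    detail = event.get("detail") or {}
    caller = (detail.get("userIdentity") or {}).get("arn", "unknown")
    return {
        "text": "{} was called by {} instead of {}".format(
            detail.get("eventName", "An API call"), caller, allowed_arn
        )
    }
```

A handler could call `provisioning_warning` with the ARN of your CI/CD user and post to Slack only when the result is not `None`, so routine deployments stay quiet.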

Also, make sure you delete the table so you don’t get charged for it:

Conclusion

There’s a ton of potential for CloudWatch Events, from triggering notifications on suspicious events to performing maintenance work when a new resource is created.

In a future post, I’d like to explore saving all of these CloudTrail events to S3 to allow for efficient querying on historical data, answering questions like “Who spun up EC2 instance i-afkj49812jfk?” or “Who allowed 0.0.0.0/0 ingress in our database security group?”

If you use this tutorial to do something cool, drop it in the comments!

Originally published at https://www.serverless.com.